How do you find duplicate lines of text in a file with the sort and uniq commands? Counting with uniq --count is a useful way to show duplicate text content in files.

When editing text or configuration files in the Linux shell, it is often required that each line of content occurs only once. In files with a large number of lines, it also helps to know how many times a line was duplicated. This does not have to be checked manually: the filters sort and uniq, combined with the count option, sort the lines and report how often each one occurs.

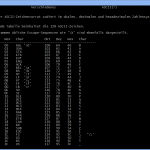

This command sorts the lines of FILE in the Linux bash shell and prefixes each distinct line with the number of times it occurs.

$ sort FILE | uniq --count

Replace the FILE placeholder with the real file name.
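A minimal sketch of the command in action. The temporary file and its fruit-name content are made up for illustration, and the long option --count assumes GNU coreutils (the short form is -c).

```shell
# Create a small sample file (hypothetical content, for illustration only).
tmpfile=$(mktemp)
printf '%s\n' apple banana apple cherry banana apple > "$tmpfile"

# Sort so that identical lines become adjacent, then count each distinct line.
counts=$(sort "$tmpfile" | uniq --count)
printf '%s\n' "$counts"

# Clean up the sample file.
rm -f "$tmpfile"
```

With this input, the output should list apple with a count of 3, banana with 2, and cherry with 1, each count padded with leading spaces.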

Next, only the duplicate lines of text in FILE are shown, each with its count.

$ sort FILE | uniq --count --repeated

Nothing is displayed if there are no duplicate lines of text in FILE.
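As a rough illustration, the sketch below runs the command on a made-up sample file and, as an optional extra step not taken from the text above, ranks the duplicates by count with a second sort. It also shows the empty result for input without duplicates. The file content and variable names are hypothetical.

```shell
# Sample file with some repeated lines (hypothetical content).
tmpfile=$(mktemp)
printf '%s\n' apple banana apple cherry banana apple > "$tmpfile"

# Show only lines that occur more than once, with their counts,
# then order the result by count, highest first.
dupes=$(sort "$tmpfile" | uniq --count --repeated | sort --numeric-sort --reverse)
printf '%s\n' "$dupes"

# Input without duplicates: uniq --repeated prints nothing at all.
nodupes=$(printf '%s\n' one two three | sort | uniq --count --repeated)
printf 'no duplicates: [%s]\n' "$nodupes"

rm -f "$tmpfile"
```

The second sort is only needed when the most frequent lines should come first; without it, the output stays in the alphabetical order produced by the first sort.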

Using sort and uniq for lines of text

sort writes the sorted concatenation of all FILE(s) to standard output.

The “sort” command provides various options to customize the sorting process and takes the file(s) to be sorted as arguments. If no FILE is specified, the “sort” command sorts the input from standard input.
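A small sketch of the standard input case, with made-up input lines piped in from printf instead of a file:

```shell
# No FILE argument: sort reads the lines from standard input.
from_stdin=$(printf '%s\n' pear apple orange | sort)
printf '%s\n' "$from_stdin"
```

This prints apple, orange and pear, one per line.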

Here are some frequently used options with the “sort” command in Linux:

-b, --ignore-leading-blanks

ignore leading blanks

-h, --human-numeric-sort

compare human readable numbers (e.g., 2K 1G)

-k, --key=KEYDEF

sort via a key; KEYDEF gives location and type

-n, --numeric-sort

compare according to string numerical value

-o, --output=FILE

write result to FILE instead of standard output

-r, --reverse

reverse the result of comparisons

-t, --field-separator=SEP

use SEP instead of non-blank to blank transition

-u, --unique

with -c, check for strict ordering; without -c, output only the first of an equal run

uniq reports or omits repeated lines. It filters adjacent matching lines from standard input, writing to standard output. Because only adjacent lines are compared, the input is usually sorted first.
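The adjacency behavior of uniq can be sketched with a made-up three-line input in which the repeated line is separated by another line. The variable names are hypothetical.

```shell
# Sample input in which the two "a" lines are not adjacent.
input=$(printf '%s\n' a b a)

# Without sorting, uniq finds no adjacent repeats and reports nothing.
without_sort=$(printf '%s\n' "$input" | uniq --repeated)

# After sorting, the two "a" lines sit next to each other and are reported.
with_sort=$(printf '%s\n' "$input" | sort | uniq --repeated)

printf 'without sort: [%s]\n' "$without_sort"
printf 'with sort:    [%s]\n' "$with_sort"
```

The first result is empty and the second contains a, which is why the commands in this post always pipe the output of sort into uniq.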

Here are some frequently used options with the “uniq” command in Linux:

-c, --count

prefix lines by the number of occurrences

-d, --repeated

only print duplicate lines, one for each group

-D

print all duplicate lines

-f, --skip-fields=N

avoid comparing the first N fields

-i, --ignore-case

ignore differences in case when comparing

Conclusion

In this post, you learned how to find duplicate content in a file by sorting its lines with the Linux command sort and counting them with uniq. This is useful for finding duplicate text content in files.